Log Streams

Log streams enable streaming of events such as function executions and console.logs from your Convex deployment to supported destinations, such as Axiom, Datadog, or a custom webhook.

The most recent logs produced by your Convex deployment can be viewed in the Dashboard Logs page, the Convex CLI, or the browser console, which is a quick way to inspect recent activity.

Log streaming to a third-party destination like Axiom or Datadog enables storing historical logs, more powerful querying and data visualization, and integrations with other tools (e.g. PagerDuty, Slack).

Log streams require a Convex Pro plan. Learn more about our plans or upgrade.

Configuring log streams

We currently support the following log streams, with plans to support many more:

- Axiom

- Datadog

- Webhook

See the instructions for configuring an integration. The specific information needed for each log stream is covered below.

Axiom

Configuring an Axiom log stream requires specifying:

- The name of your Axiom dataset

- An Axiom API key

- An optional list of attributes and their values to be included in all log events sent to Axiom. These will be sent via the attributes field in the Ingest API.

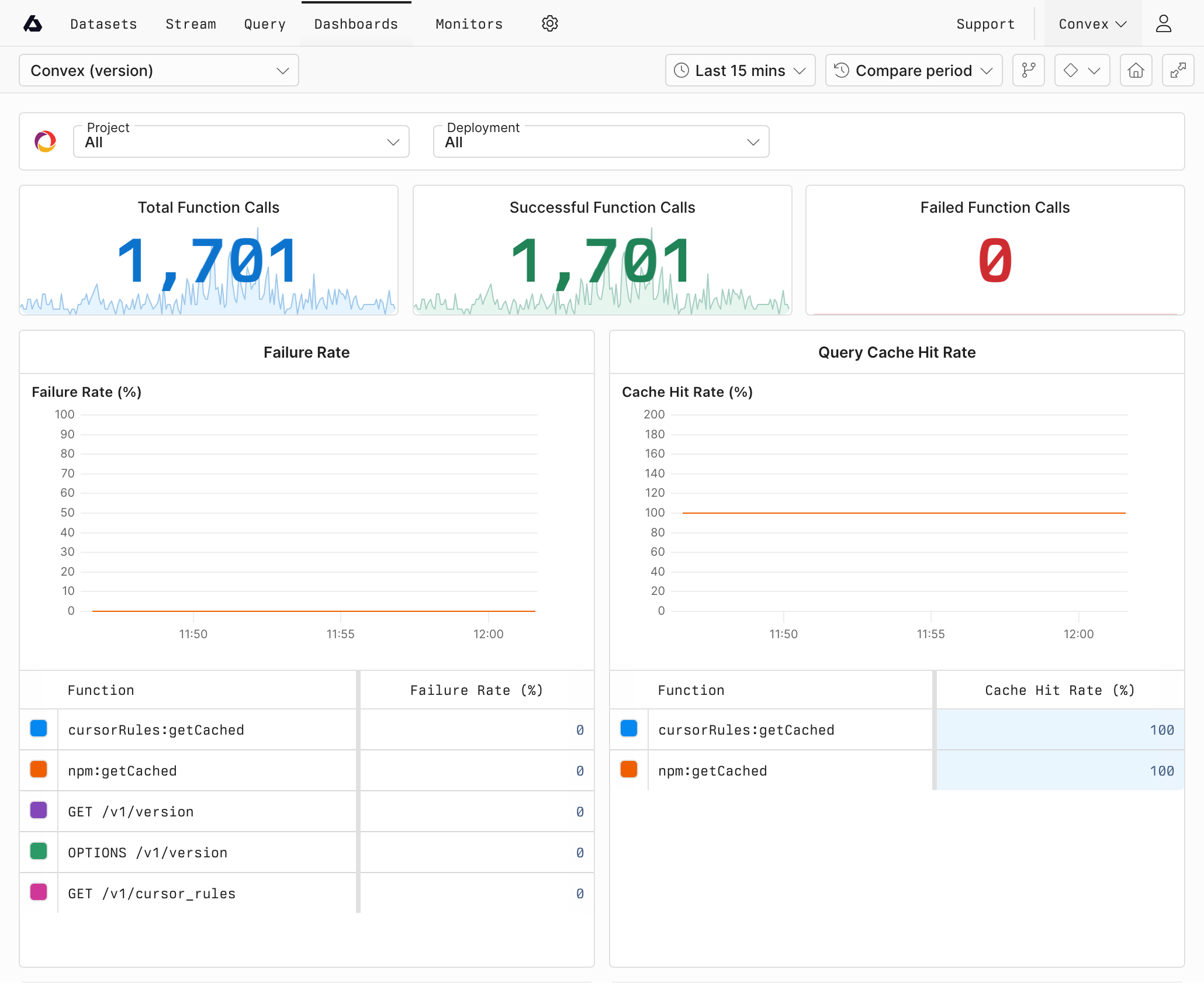

When configuring a Convex dataset in Axiom, a dashboard will automatically be created in Axiom. You can find it in the Integrations section of the Dashboards tab. To customize the layout of the dashboard, you can fork it.

Datadog

Configuring a Datadog log stream requires specifying:

- The site location of your Datadog deployment

- A Datadog API key

- A comma-separated list of tags that will be passed using the ddtags field in all payloads sent to Datadog. This can be used to include any other metadata that can be useful for querying or categorizing your Convex logs ingested by your Datadog deployment.

Webhook

A webhook log stream is the simplest and most generic stream. It pipes logs via POST requests to any URL you configure. The only parameter required to set up this stream is the desired webhook URL.

A request to this webhook contains as its body a JSON array of events in the schema defined below.
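As a rough illustration of consuming that body, the sketch below parses a JSON array of events and counts them per topic. The helper name countEventsByTopic is hypothetical and not part of any Convex API; it only assumes that each event carries a string topic field, as described in the schema on this page.

```typescript
// Hypothetical helper: count the events in one webhook request body by topic.
// Assumes the body is a JSON array whose entries each have a string `topic`.
function countEventsByTopic(body: string): Map<string, number> {
  const parsed: unknown = JSON.parse(body);
  if (!Array.isArray(parsed)) {
    throw new Error("Expected a JSON array of log events");
  }
  const counts = new Map<string, number>();
  for (const item of parsed) {
    const topic = (item as { topic?: unknown } | null)?.topic;
    if (typeof topic !== "string") continue; // skip malformed entries
    counts.set(topic, (counts.get(topic) ?? 0) + 1);
  }
  return counts;
}
```

Entries without a string topic are skipped rather than rejected, so one malformed event does not discard the whole batch.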

Securing webhook log streams

Webhook log stream requests include a signature so you can verify that a request is legitimate. The request body is signed using HMAC-SHA256 and encoded as a lowercase hex string, and the resulting signature is included in the x-webhook-signature HTTP header. The HMAC secret is visible in the dashboard upon configuring the webhook.

To verify the authenticity of a request, sign and encode the request body using the HMAC secret and compare the result in constant time (for instance using SubtleCrypto.verify() in JavaScript) with the signature included in the request header. Note that the signature is prefixed with sha256=.

For additional security, consider validating that the timestamp field of the log event body falls within an acceptable time range to prevent replay attacks.

import { Hono } from "hono";

const app = new Hono();

app.post("/webhook", async (c) => {
  const payload = await c.req.json();
  const log = payload[0];
  // If using JSONL, parse the first line:
  // const payload = await c.req.text();
  // const log = JSON.parse(payload.split("\n")[0]);

  // Validate that the timestamp of the first log is within 5 minutes
  if (log.timestamp < Date.now() - 5 * 60 * 1000) {
    c.status(403);
    return c.text("Request expired");
  }

  const signature = c.req.header("x-webhook-signature");
  if (!signature) {
    c.status(401);
    return c.text("Unauthorized");
  }

  const hmacSecret = await crypto.subtle.importKey(
    "raw",
    new TextEncoder().encode(process.env.WEBHOOK_SECRET!),
    { name: "HMAC", hash: "SHA-256" },
    false,
    ["verify"],
  );

  const hashPayload = await c.req.arrayBuffer();

  // Use constant-time comparison to verify the payload
  const isValid = await crypto.subtle.verify(
    "HMAC",
    hmacSecret,
    Uint8Array.fromHex(signature.replace("sha256=", "")),
    hashPayload,
  );

  if (isValid) {
    return c.text("Success");
  }

  c.status(401);
  return c.text("Unauthorized");
});

export default app;

Log event schema

Log streams configured before May 23, 2024 will use the legacy format documented on this page. We recommend updating your log stream to use the new format.

Log events have a well-defined JSON schema that allows building complex, type-safe pipelines ingesting log events.

All events will have the following three fields:

- topic: string, categorizes a log event, one of ["verification", "console", "function_execution", "audit_log", "concurrency_stats", "scheduler_stats", "current_storage_usage"]

- timestamp: number, Unix epoch timestamp in milliseconds as an integer

- convex: An object containing metadata related to your Convex deployment, including deployment_name, deployment_type, project_name, and project_slug.
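One way to lean on these common fields in a type-safe pipeline is a small runtime guard. The following is a minimal sketch: the names TOPICS, BaseLogEvent, and isBaseLogEvent are hypothetical, and the value types inside convex are left open because they are not specified here.

```typescript
// Topic values as listed in the common fields.
const TOPICS = [
  "verification",
  "console",
  "function_execution",
  "audit_log",
  "concurrency_stats",
  "scheduler_stats",
  "current_storage_usage",
] as const;

type Topic = (typeof TOPICS)[number];

type BaseLogEvent = {
  topic: Topic;
  timestamp: number; // Unix epoch timestamp in milliseconds
  convex?: Record<string, unknown>; // deployment metadata; not checked by the guard below
};

// Hypothetical guard: checks only `topic` and `timestamp`.
function isBaseLogEvent(value: unknown): value is BaseLogEvent {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as Record<string, unknown>;
  const topic = candidate["topic"];
  const timestamp = candidate["timestamp"];
  return (
    typeof topic === "string" &&
    TOPICS.some((known) => known === topic) &&
    typeof timestamp === "number" &&
    Number.isInteger(timestamp)
  );
}
```

Once an event passes the guard, a switch on topic can route it to topic-specific handling.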

Note: In the Axiom integration, event-specific information will be available under the data field.

verification events

This is an event sent to confirm the log stream is working. Schema:

- topic: "verification"

- timestamp: Unix epoch timestamp in milliseconds

- message: string

console events

These events carry logs emitted by Convex functions via the console API.

Schema:

- topic: "console"

- timestamp: Unix epoch timestamp in milliseconds

- function: object, see function fields

- log_level: string, one of ["DEBUG", "INFO", "LOG", "WARN", "ERROR"]

- message: string, the object-inspect representation of the console.log payload

- is_truncated: boolean, whether this message was truncated to fit within our logging limits

- system_code: optional string, present for automatically added warnings when functions are approaching limits

Example event for console.log("Sent message!") from a mutation:

{
  "topic": "console",
  "timestamp": 1715879172882,
  "function": {
    "path": "messages:send",
    "request_id": "d064ef901f7ec0b7",
    "type": "mutation"
  },
  "log_level": "LOG",
  "message": "'Sent message!'"
}
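A downstream consumer might only care about the noisier levels. The sketch below filters console events by log_level. Note the assumption: it treats the order in which the levels are listed in the schema (DEBUG through ERROR) as increasing severity, which the schema does not state, and atOrAbove is a hypothetical helper name.

```typescript
// Levels in the order the schema lists them; treating this order as
// increasing severity is an assumption made for this sketch.
const LOG_LEVELS = ["DEBUG", "INFO", "LOG", "WARN", "ERROR"] as const;
type LogLevel = (typeof LOG_LEVELS)[number];

// Only the fields this sketch needs from a console event.
type ConsoleEvent = {
  topic: "console";
  timestamp: number;
  log_level: LogLevel;
  message: string;
};

// Hypothetical helper: keep events at or above the given level.
function atOrAbove(events: ConsoleEvent[], minimum: LogLevel): ConsoleEvent[] {
  const threshold = LOG_LEVELS.indexOf(minimum);
  return events.filter((event) => LOG_LEVELS.indexOf(event.log_level) >= threshold);
}
```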

function_execution events

These events occur whenever a function is run.

Schema:

- topic: "function_execution"

- timestamp: Unix epoch timestamp in milliseconds

- function: object, see function fields

- execution_time_ms: number, the time in milliseconds this function took to run

- status: string, one of ["success", "failure"]

- error_message: string, present for functions with status failure, containing the error and any stack trace.

- mutation_queue_length: optional number (for mutations only), the length of the per-session mutation queue at the time the mutation was executed. This is useful for monitoring and debugging mutation queue backlogs in individual sessions.

- mutation_retry_count: number, the number of previous failed executions (for mutations only) run before a successful one. Only applicable to mutations and actions.

- occ_info: object, if the function call resulted in an OCC (write conflict between two functions), this field will be present and contain information relating to the OCC. Learn more about write conflicts.

  - table_name: table the conflict occurred in

  - document_id: Id of the document that received conflicting writes

  - write_source: name of the function that conflicted writes against table_name

  - retry_count: the number of previously failed attempts before the current function execution

- scheduler_info: object, if set, indicates that the function was originally invoked by the scheduler.

  - job_id: the job within the _scheduled_functions table

- usage:

  - database_read_bytes: number

  - database_write_bytes: number, this and database_read_bytes make up the database bandwidth used by the function

  - database_read_documents: number, the number of documents read by the function

  - file_storage_read_bytes: number

  - file_storage_write_bytes: number, this and file_storage_read_bytes make up the file bandwidth used by the function

  - vector_storage_read_bytes: number

  - vector_storage_write_bytes: number, this and vector_storage_read_bytes make up the vector bandwidth used by the function

  - memory_used_mb: number, for queries, mutations, and actions, the memory used in MiB. This combined with execution_time_ms makes up the compute.

Example event for a query:

{
  "data": {
    "execution_time_ms": 294,
    "function": {
      "cached": false,
      "path": "message:list",
      "request_id": "892104e63bd39d9a",
      "type": "query"
    },
    "status": "success",
    "timestamp": 1715973841548,
    "topic": "function_execution",
    "usage": {
      "database_read_bytes": 1077,
      "database_write_bytes": 0,
      "database_read_documents": 3,
      "file_storage_read_bytes": 0,
      "file_storage_write_bytes": 0,
      "vector_storage_read_bytes": 0,
      "vector_storage_write_bytes": 0
    }
  }
}
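The usage fields pair up into read and write halves of each bandwidth category. As a small worked example, the sketch below adds each pair together. The helper name bandwidthBytes and the shape of its return value are hypothetical; the input values are the usage figures from the example query event.

```typescript
// Byte counters from the usage object of a function_execution event.
type Usage = {
  database_read_bytes: number;
  database_write_bytes: number;
  file_storage_read_bytes: number;
  file_storage_write_bytes: number;
  vector_storage_read_bytes: number;
  vector_storage_write_bytes: number;
};

// Hypothetical helper: total bandwidth per category, in bytes.
function bandwidthBytes(usage: Usage): { database: number; file: number; vector: number } {
  return {
    database: usage.database_read_bytes + usage.database_write_bytes,
    file: usage.file_storage_read_bytes + usage.file_storage_write_bytes,
    vector: usage.vector_storage_read_bytes + usage.vector_storage_write_bytes,
  };
}

// Usage values from the example query event.
const exampleBandwidth = bandwidthBytes({
  database_read_bytes: 1077,
  database_write_bytes: 0,
  file_storage_read_bytes: 0,
  file_storage_write_bytes: 0,
  vector_storage_read_bytes: 0,
  vector_storage_write_bytes: 0,
});
// exampleBandwidth is { database: 1077, file: 0, vector: 0 }
```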

Function fields

The following fields are added under function for all console and function_execution events:

- type: string, one of ["query", "mutation", "action", "http_action"]

- path: string, e.g. "myDir/myFile:myFunction", or "POST /my_endpoint"

- cached: optional boolean, for queries this denotes whether this event came from a cached function execution

- request_id: string, the request ID of the function.

concurrency_stats events

These events are sent once a minute, reporting function concurrency statistics. Events are only sent if the stats have changed. Missing data points should be interpolated from the previous data event.

Schema:

Each event contains concurrency statistics for each function type (e.g. queries, mutations, actions). The record for each function type has the following schema:

- num_running: The maximum number of concurrently running functions within the minute the metric was reported

- num_queued: The maximum number of queued functions within the minute the metric was reported. Functions may become temporarily queued when concurrency limits have been reached.

The event itself has the following fields:

- topic: "concurrency_stats"

- timestamp: Unix epoch timestamp in milliseconds

- query: Concurrency stats for queries

- mutation: Concurrency stats for mutations

- action: Concurrency stats for actions

- node_action: Concurrency stats for node actions

- http_action: Concurrency stats for HTTP actions
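Because events are only sent when the stats change, a per-minute chart needs its gaps filled. The sketch below reads "interpolated from the previous data event" as carrying the last reported values forward, which is one interpretation rather than a documented algorithm. The ConcurrencyPoint type (one function type's record paired with its event timestamp) and the fillForward helper are hypothetical.

```typescript
// One function type's record, paired with the timestamp of its event.
type ConcurrencyPoint = {
  timestamp: number; // Unix epoch timestamp in milliseconds
  num_running: number;
  num_queued: number;
};

const MINUTE_MS = 60 * 1000;

// Hypothetical helper: repeat each reported point once per minute until the
// next reported point (or `until`, for the last one).
function fillForward(points: ConcurrencyPoint[], until: number): ConcurrencyPoint[] {
  const sorted = [...points].sort((a, b) => a.timestamp - b.timestamp);
  const filled: ConcurrencyPoint[] = [];
  sorted.forEach((current, index) => {
    const next = sorted[index + 1];
    const nextTimestamp = next !== undefined ? next.timestamp : until;
    for (let t = current.timestamp; t < nextTimestamp; t += MINUTE_MS) {
      filled.push({ ...current, timestamp: t });
    }
  });
  return filled;
}
```

For example, points reported at minute 0 and minute 3 yield minutes 0, 1, and 2 with the first point's values, followed by minute 3 with the second point's values.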

scheduler_stats events

These events are periodically sent by the scheduler reporting statistics from the scheduled function executor.

Schema:

- topic: "scheduler_stats"

- timestamp: Unix epoch timestamp in milliseconds

- lag_seconds: The difference between timestamp and the scheduled run time of the oldest overdue scheduled job, in seconds.

- num_running_jobs: number, the number of scheduled jobs currently running
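To make the lag_seconds arithmetic concrete, the sketch below recovers the scheduled run time of the oldest overdue job and applies a simple threshold. It assumes lag_seconds equals timestamp minus the scheduled run time, expressed in seconds. Both helper names and the threshold parameter are hypothetical.

```typescript
type SchedulerStats = {
  topic: "scheduler_stats";
  timestamp: number; // Unix epoch timestamp in milliseconds
  lag_seconds: number;
  num_running_jobs: number;
};

// Assumes lag_seconds = (timestamp - scheduled run time) / 1000.
function oldestOverdueRunTime(event: SchedulerStats): Date {
  return new Date(event.timestamp - event.lag_seconds * 1000);
}

// Hypothetical alerting rule: flag events whose lag exceeds a chosen threshold.
function isLagging(event: SchedulerStats, maxLagSeconds: number): boolean {
  return event.lag_seconds > maxLagSeconds;
}
```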

current_storage_usage events

These events are periodically sent with snapshots of the current storage usage across your deployment. They provide aggregated totals for all storage types.

These events are not currently sent for self-hosted deployments.

For calculating billing costs:

- Database Storage Bytes: total_document_size_bytes + total_index_size_bytes

- File Storage: total_file_storage_bytes + total_backup_storage_bytes

- Vector Storage: total_vector_storage_bytes

Schema:

- topic: "current_storage_usage"

- timestamp: Unix epoch timestamp in milliseconds

- total_document_size_bytes: number, total size in bytes of all documents stored in database tables

- total_index_size_bytes: number, total size in bytes of all database indexes

- total_vector_storage_bytes: number, total size in bytes of vector index storage

- total_file_storage_bytes: number, total size in bytes of file storage

- total_backup_storage_bytes: number, total size in bytes of snapshot/backup storage

Example event:

{
  "topic": "current_storage_usage",
  "timestamp": 1715973841548,
  "total_document_size_bytes": 104857600,
  "total_index_size_bytes": 10485760,
  "total_vector_storage_bytes": 5242880,
  "total_file_storage_bytes": 52428800,
  "total_backup_storage_bytes": 209715200
}
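The billing formulas for this event type can be applied directly to the example event. The following is a minimal sketch; the helper name billableStorage and its return field names are hypothetical.

```typescript
type StorageUsage = {
  total_document_size_bytes: number;
  total_index_size_bytes: number;
  total_vector_storage_bytes: number;
  total_file_storage_bytes: number;
  total_backup_storage_bytes: number;
};

// Hypothetical helper applying the three billing sums described for this event.
function billableStorage(usage: StorageUsage) {
  return {
    databaseStorageBytes: usage.total_document_size_bytes + usage.total_index_size_bytes,
    fileStorageBytes: usage.total_file_storage_bytes + usage.total_backup_storage_bytes,
    vectorStorageBytes: usage.total_vector_storage_bytes,
  };
}

// Values from the example event.
const exampleStorage = billableStorage({
  total_document_size_bytes: 104857600,
  total_index_size_bytes: 10485760,
  total_vector_storage_bytes: 5242880,
  total_file_storage_bytes: 52428800,
  total_backup_storage_bytes: 209715200,
});
// databaseStorageBytes: 115343360, fileStorageBytes: 262144000, vectorStorageBytes: 5242880
```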

audit_log events

These events represent changes to your deployment, which also show up in the History tab in the dashboard.

Schema:

- topic: "audit_log"

- timestamp: Unix epoch timestamp in milliseconds

- audit_log_action: string, e.g. "create_environment_variable", "push_config", "change_deployment_state"

- audit_log_metadata: string, stringified JSON holding metadata about the event. The exact format of this event may change.

Example push_config audit log:

{
  "topic": "audit_log",
  "timestamp": 1714421999886,
  "audit_log_action": "push_config",
  "audit_log_metadata": "{\"auth\":{\"added\":[],\"removed\":[]},\"crons\":{\"added\":[],\"deleted\":[],\"updated\":[]},..."
}
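Since audit_log_metadata is a stringified JSON value whose format may change, a consumer probably wants to parse it defensively. The sketch below returns a tagged result instead of throwing. The parseAuditMetadata helper and the ParsedMetadata type are hypothetical, and the parsed value is deliberately left as unknown.

```typescript
type AuditLogEvent = {
  topic: "audit_log";
  timestamp: number;
  audit_log_action: string;
  audit_log_metadata: string; // stringified JSON
};

type ParsedMetadata = { ok: true; value: unknown } | { ok: false };

// Hypothetical helper: parse the metadata without throwing on invalid JSON.
function parseAuditMetadata(event: AuditLogEvent): ParsedMetadata {
  try {
    return { ok: true, value: JSON.parse(event.audit_log_metadata) };
  } catch {
    return { ok: false };
  }
}
```

Callers can then branch on ok and validate only the keys they depend on, which limits breakage if the metadata format changes.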

Guarantees

Log events provide a best-effort delivery guarantee. Log streams are buffered in-memory and sent out in batches to your deployment's configured streams. This means that logs can be dropped if ingestion throughput is too high. Similarly, due to network retries, it is possible for a log event to be duplicated in a log stream.
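If duplicates matter for your pipeline, one option is to deduplicate on the receiving side. The schema on this page does not define a per-event identifier, so the sketch below keys on the serialized event itself; that choice, and the dedupe helper, are assumptions for illustration. A side effect is that two genuinely distinct events with identical content and timestamp would also be collapsed.

```typescript
type StreamedEvent = { topic: string; timestamp: number; [key: string]: unknown };

// Hypothetical helper: drop events whose serialized form has been seen before.
// Pass the same `seen` set across batches to catch duplicates between requests.
function dedupe(events: StreamedEvent[], seen: Set<string> = new Set()): StreamedEvent[] {
  const unique: StreamedEvent[] = [];
  for (const event of events) {
    const key = JSON.stringify(event);
    if (seen.has(key)) continue;
    seen.add(key);
    unique.push(event);
  }
  return unique;
}
```

In a long-running consumer the seen set would need some eviction policy, for example dropping keys older than a time window, since it otherwise grows without bound.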

That's it! Your logs are now configured to stream out. If there is a log streaming destination that you would like to see supported, please let us know!